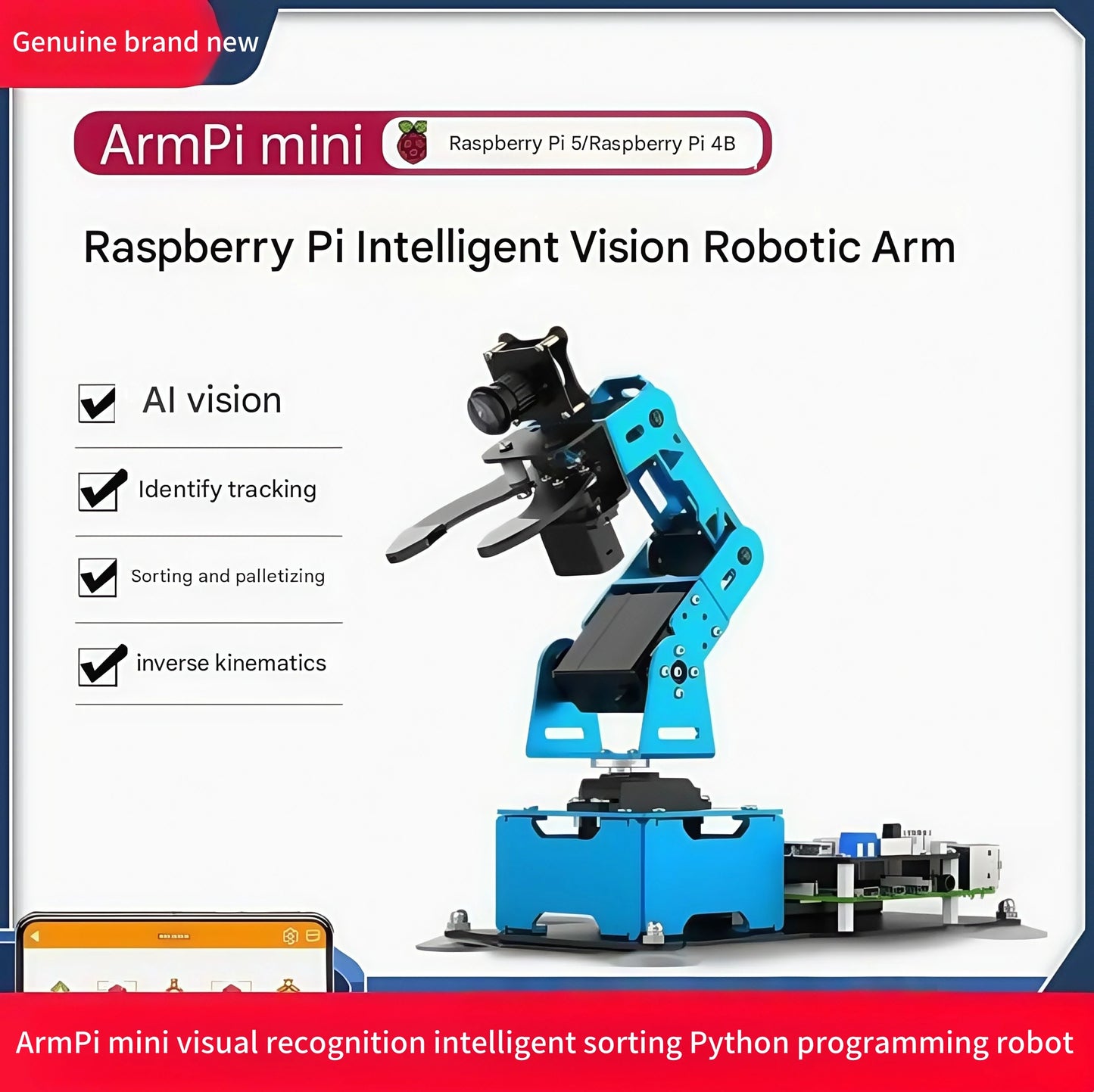

Visual Recognition Robotic Arm with Intelligent Sorting

The Visual Recognition Robotic Arm turns a desktop into a smart workcell that sees, decides, and picks with calm precision. Mount the camera, place a tray, and tap start: the system detects parts by color, shape, or marker, then plans a collision-aware path to grip and place each one. A rigid frame, smooth servos, and encoded joints keep motion repeatable, while soft-touch fingertips and adjustable grip force protect delicate items. The on-screen live view overlays detections, pick points, and confidence scores, so what the robot “sees” is exactly what you see, which makes the arm easy to trust from day one.

Setup is simple. A guided wizard calibrates the camera to the arm in minutes, loads sample datasets, and walks you through your first picks with blocks, caps, or components. Drag-and-drop flow blocks handle the “detect → pick → place” sequence, while advanced users can switch to Python or ROS to fine-tune thresholds, add sorting logic, or log images for retraining. Quick-swap toolheads (two-finger gripper, suction cup, or pen holder) let you change tasks without rebuilding the program, and conveyor and bin add-ons turn demos into light kitting lines.

Safety and care are built in. Torque limits, software travel bounds, and an e-stop keep sessions calm, and fallbacks pause the cycle if detection confidence drops or a part shifts. Maintenance is minimal: keep the lenses clean, check fasteners monthly, and re-run calibration after transport. For classrooms, maker labs, and prototyping benches, the Visual Recognition Robotic Arm delivers approachable computer vision, steady manipulation, and that “it just picked it” moment that makes automation click.

Share